This month, Jeremy Howard, an artificial intelligence researcher, introduced an online chat bot called ChatGPT to his 7-year-old daughter. It had been released a few days earlier by OpenAI, one of the world’s most ambitious A.I. labs.

He told her to ask the experimental chat bot whatever came to mind. She asked what trigonometry was good for, where black holes came from and why chickens incubated their eggs. Each time, it answered in clear, well-punctuated prose. When she asked for a computer program that could predict the path of a ball thrown through the air, it gave her that, too.

Over the next few days, Dr. Howard, a data scientist and professor whose work inspired the creation of ChatGPT and similar technologies, came to see the chat bot as a new kind of personal tutor. It could teach his daughter math, science and English, not to mention a few other important lessons. Chief among them: Don’t believe everything you are told.

“It’s a thrill to see her learn like this,” he said. “But I also told her: Don’t trust everything it gives you. It can make mistakes.”

OpenAI is among the many companies, academic labs and independent researchers working to build more advanced chat bots. These systems cannot exactly chat like a human, but they often seem to. They can also retrieve and repackage information with a speed that humans never could. They can be thought of as digital assistants, like Siri or Alexa, that are better at understanding what you are looking for and giving it to you.

After the release of ChatGPT, which has been used by more than a million people, many experts believe these new chat bots are poised to reinvent or even replace internet search engines like Google and Bing.

They can serve up information in tight sentences, rather than long lists of blue links. They explain concepts in ways that people can understand. And they can deliver facts, while also generating business plans, term paper topics and other new ideas from scratch.

“You now have a computer that can answer any question in a way that makes sense to a human,” said Aaron Levie, chief executive of a Silicon Valley company, Box, and one of the many executives exploring the ways these chat bots will change the technological landscape. “It can extrapolate and take ideas from different contexts and merge them together.”

The new chat bots do this with what seems like complete confidence. But they don’t always tell the truth. Sometimes, they even fail at simple arithmetic. They blend fact with fiction. And as they continue to improve, people could use them to generate and spread untruths.

The Spread of Misinformation and Falsehoods

Google recently built a system specifically for conversation, called LaMDA, or Language Model for Dialogue Applications. This spring, a Google engineer claimed it was sentient. It was not, but it captured the public’s imagination.

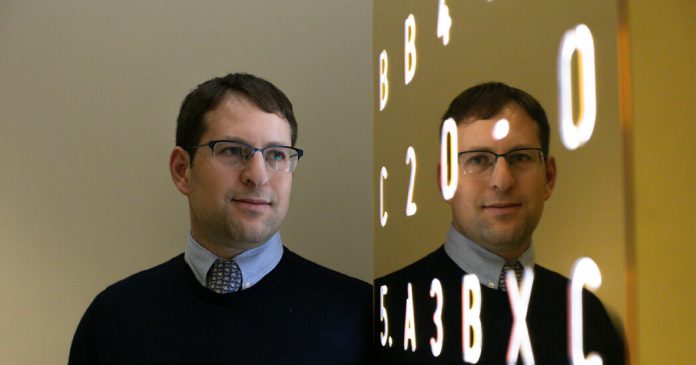

Aaron Margolis, a data scientist in Arlington, Va., was among the limited number of people outside Google who were allowed to use LaMDA through an experimental Google app, AI Test Kitchen. He was consistently amazed by its talent for open-ended conversation. It kept him entertained. But he warned that it could be a bit of a fabulist, as was to be expected from a system trained on vast amounts of information posted to the internet.

“What it gives you is kind of like an Aaron Sorkin movie,” he said. Mr. Sorkin wrote “The Social Network,” a movie often criticized for stretching the truth about the origin of Facebook. “Parts of it will be true, and parts will not be true.”

He recently asked both LaMDA and ChatGPT to chat with him as if they were Mark Twain. When he asked LaMDA, it soon described a meeting between Twain and Levi Strauss, and said the writer had worked for the bluejeans mogul while living in San Francisco in the mid-1800s. It seemed true. But it was not. Twain and Strauss lived in San Francisco at the same time, but they never worked together.

Scientists call that problem “hallucination.” Much like a good storyteller, chat bots have a way of taking what they have learned and reshaping it into something new, with no regard for whether it is true.

LaMDA is what artificial intelligence researchers call a neural network, a mathematical system loosely modeled on the network of neurons in the brain. This is the same technology that translates between French and English on services like Google Translate and identifies pedestrians as self-driving cars navigate city streets.

A neural network learns skills by analyzing data. By pinpointing patterns in thousands of cat photos, for example, it can learn to recognize a cat.
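To make the idea of learning from examples concrete, here is a minimal sketch of a single artificial neuron (a perceptron), vastly simpler than the networks described here. It adjusts its weights only when it misclassifies a labeled example. The two numeric features, the labels and the helper names are hypothetical stand-ins for what a real system would extract from photos.

```python
# A minimal sketch: one artificial "neuron" (a perceptron) that learns to
# separate two classes from labeled examples. Features and labels are
# made up purely for illustration.

def train_perceptron(examples, epochs=20, lr=0.1):
    """Learn weights w and bias b so that sign(w . x + b) matches each label."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for x, label in examples:  # label is +1 ("cat") or -1 ("not cat")
            activation = w[0] * x[0] + w[1] * x[1] + b
            predicted = 1 if activation > 0 else -1
            if predicted != label:  # nudge the weights only on mistakes
                w[0] += lr * label * x[0]
                w[1] += lr * label * x[1]
                b += lr * label
    return w, b


def predict(w, b, x):
    """Classify a feature vector with the learned weights."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else -1


# Hypothetical training data: (feature vector, label)
examples = [
    ((0.9, 0.8), 1), ((0.8, 0.9), 1), ((0.7, 0.95), 1),
    ((0.1, 0.2), -1), ((0.2, 0.1), -1), ((0.3, 0.2), -1),
]
w, b = train_perceptron(examples)
print(predict(w, b, (0.85, 0.85)), predict(w, b, (0.15, 0.15)))
```

Nobody writes a rule for what a "cat" looks like here; the weights come entirely from the examples, which is the point the paragraph makes about neural networks.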

Five years ago, researchers at Google and labs like OpenAI started designing neural networks that analyzed enormous amounts of digital text, including books, Wikipedia articles, news stories and online chat logs. Scientists call them “large language models.” By identifying billions of distinct patterns in the way people connect words, numbers and symbols, these systems learned to generate text on their own.
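Real large language models are neural networks with billions of parameters. The basic idea of learning which words tend to follow which, and then generating new text from those patterns, can still be illustrated with a much cruder technique: a bigram table. The sketch below is that substitute, not how GPT-style models actually work. It records each word's observed successors in a tiny made-up corpus, then generates text by repeatedly picking one of them; the function names are hypothetical.

```python
import random
from collections import defaultdict


def build_bigram_table(text):
    """Record, for each word, every word that followed it in the training text."""
    words = text.split()
    table = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        table[current].append(nxt)
    return table


def generate(table, start, length=8, seed=0):
    """Produce text by repeatedly choosing a word that followed the previous one."""
    rng = random.Random(seed)  # seeded so the output is reproducible
    out = [start]
    for _ in range(length - 1):
        followers = table.get(out[-1])
        if not followers:  # dead end: no word ever followed this one
            break
        out.append(rng.choice(followers))
    return " ".join(out)


# A tiny made-up corpus standing in for "books, Wikipedia articles, news stories"
corpus = "the cat sat on the mat . the dog sat on the rug . the cat saw the dog ."
table = build_bigram_table(corpus)
print(generate(table, "the"))
```

Because each step looks only at the previous word, the output is locally plausible but has no notion of whether it is true, a crude cousin of the problem described above.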

Their ability to generate language surprised many researchers in the field, including many of the researchers who built them. The technology could mimic what people had written and combine disparate concepts. You could ask it to write a “Seinfeld” scene in which Jerry learns an esoteric mathematical technique called a bubble sort algorithm, and it would.

With ChatGPT, OpenAI has worked to refine the technology. It does not do free-flowing conversation as well as Google’s LaMDA. It was designed to operate more like Siri, Alexa and other digital assistants. Like LaMDA, ChatGPT was trained on a sea of digital text culled from the internet.

As people tested the system, it asked them to rate its responses. Were they convincing? Were they useful? Were they truthful? Then, through a technique called reinforcement learning, it used the ratings to hone the system and more carefully define what it would and would not do.
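OpenAI’s actual training procedure is far more involved than anything that fits here. The toy sketch below only illustrates the underlying idea that ratings can serve as a reward that makes highly rated answers more likely. It assumes three hypothetical canned responses with made-up average ratings, and applies exact policy-gradient ascent to a softmax distribution over them.

```python
import math


def softmax(logits):
    """Turn arbitrary scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def refine(ratings, steps=200, lr=0.5):
    """Gradient ascent on the expected rating of a softmax 'policy'.

    With p = softmax(theta) and J = sum_i p_i * r_i, the exact gradient is
    dJ/dtheta_j = p_j * (r_j - J): answers rated above average gain weight.
    """
    theta = [0.0] * len(ratings)
    for _ in range(steps):
        p = softmax(theta)
        expected = sum(pi * ri for pi, ri in zip(p, ratings))
        theta = [t + lr * pi * (ri - expected) for t, pi, ri in zip(theta, p, ratings)]
    return softmax(theta)


# Hypothetical candidate answers and hypothetical average ratings (1-5 scale)
responses = ["confident but wrong", "correct and clear", "rude refusal"]
ratings = [2.0, 4.5, 1.0]
probs = refine(ratings)
for response, p in zip(responses, probs):
    print(f"{response}: {p:.3f}")
```

The system never sees a rule saying "be correct"; the best-rated response simply ends up with most of the probability.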

“This allows us to get to the point where the model can interact with you and admit when it’s wrong,” said Mira Murati, OpenAI’s chief technology officer. “It can reject something that is inappropriate, and it can challenge a question or a premise that is incorrect.”

The method was not perfect. OpenAI warned those using ChatGPT that it “may occasionally generate incorrect information” and “produce harmful instructions or biased content.” But the company plans to continue refining the technology, and reminds people using it that it is still a research project.

Google, Meta and other companies are also addressing accuracy issues. Meta recently removed an online preview of its chat bot, Galactica, because it repeatedly generated incorrect and biased information.

Experts have warned that companies do not control the fate of these technologies. Systems like ChatGPT, LaMDA and Galactica are based on ideas, research papers and computer code that have circulated freely for years.

Companies like Google and OpenAI can push the technology forward at a faster rate than others. But their latest technologies have been reproduced and widely distributed. They cannot prevent people from using these systems to spread misinformation.

Just as Dr. Howard hoped that his daughter would learn not to trust everything she read on the internet, he hoped society would learn the same lesson.

“You could program millions of these bots to appear like humans, having conversations designed to convince people of a particular point of view,” he said. “I’ve warned about this for years. Now it’s obvious that this is just waiting to happen.”